What’s New in Confluent Cloud Security

Today, the ability to capture and harness the value of data in real time is critical for businesses to remain competitive in a data-driven world. Apache Kafka®, a scalable, open-source event streaming platform, has become the de facto solution for companies leading the way in building real-time event streaming apps. Kafka is now at the heart of thousands of mission-critical and revenue-generating applications that turn real-time events into valuable business outcomes, which makes it more important than ever to ensure that the data it processes is properly secured and meets regulatory compliance requirements.

Confluent Cloud, a complete event streaming platform that is secure by default, reduces the risk of Apache Kafka security breaches without hindering agile development. You can rely on our expertise to manage the infrastructure while you focus on your applications, so you can innovate and take your applications to production much faster.

Confluent Cloud currently provides several key security features, including:

- Authentication: We provide SAML/SSO so that you can use your identity provider to authenticate user logins to Confluent Cloud.

- Authorization: We provide granular access control through service accounts and ACLs so that you can control which applications can access critical Kafka resources such as topics and consumer groups.

- Data confidentiality: We encrypt all data at rest by default. All network traffic to and from clients (data in transit) is encrypted with TLS 1.2; TLS 1.0 and 1.1 are no longer supported and have been disabled.

- Private networking: We also provide VPC peering so that you can utilize secure and private networking.
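On the client side, these controls mean that an application connects over TLS and authenticates with credentials tied to a service account. The following is a minimal sketch, assuming a Python client built on librdkafka (such as confluent-kafka-python) and an API key and secret issued to a service account; the helper name and all placeholder values are hypothetical.

```python
from typing import Dict


def confluent_cloud_client_config(
    bootstrap_servers: str, api_key: str, api_secret: str
) -> Dict[str, str]:
    """Build a librdkafka-style config for a client connecting to Confluent Cloud.

    Hypothetical helper: assumes SASL/PLAIN authentication with an API key and
    secret belonging to a service account.
    """
    if not bootstrap_servers:
        raise ValueError("bootstrap_servers must not be empty")
    if not api_key or not api_secret:
        raise ValueError("api_key and api_secret are required")
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL_SSL: the connection is encrypted with TLS, and the client
        # authenticates with SASL inside that encrypted session.
        "security.protocol": "SASL_SSL",
        # PLAIN sends the API key/secret as username/password over TLS.
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


# Placeholder values (hypothetical), not real credentials or endpoints.
config = confluent_cloud_client_config(
    "pkc-example.us-west-2.aws.confluent.cloud:9092", "MY_API_KEY", "MY_API_SECRET"
)
```

The resulting dict could be passed to a client constructor such as `confluent_kafka.Producer(config)`. Which topics and consumer groups that client can actually use is still governed by the ACLs attached to its service account.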

In light of this month’s Project Metamorphosis theme, Secure, we would like to share an update on our ongoing efforts to build a secure service that meets the needs of some of the most security-conscious and regulated industries. We have been working on several features that provide additional data security, access control, and activity monitoring:

- Private networking: Private Link support ensures that all data transferred over the network is secure and private

- Data confidentiality: Bring Your Own Key (BYOK) lets you encrypt data at rest with a key that you manage, giving you more control, including the ability to disable access if needed

- Activity monitoring: Audit Logs record user and application access to resources, which can be used to identify anomalies and bad actors

- Authorization: Role-Based Access Control (RBAC) lets you set granular permissions for users and restrict access to critical resources such as production environments

Together, these features provide a complete set of enterprise-grade security capabilities so that you can confidently develop event-driven applications in the cloud.

General availability of BYOK on AWS

On July 14th, we initiated the public preview of Bring Your Own Key (BYOK) encryption support on dedicated clusters on AWS. After positive feedback from our customers, we made this feature generally available on October 1st. This has been one of the most requested features for several reasons:

- Data-at-rest encryption with your own key is a must-have and is often a blocker to adoption and expansion, especially for organizations with strong InfoSec requirements.

- BYOK encryption gives customers a high level of confidence that they control access to their data at rest, especially when it comes to potential government access to data on drives (subpoenas, etc.).

- BYOK encryption is the fundamental security feature that showcases our commitment to security. It is a key factor for driving enterprise security capabilities.

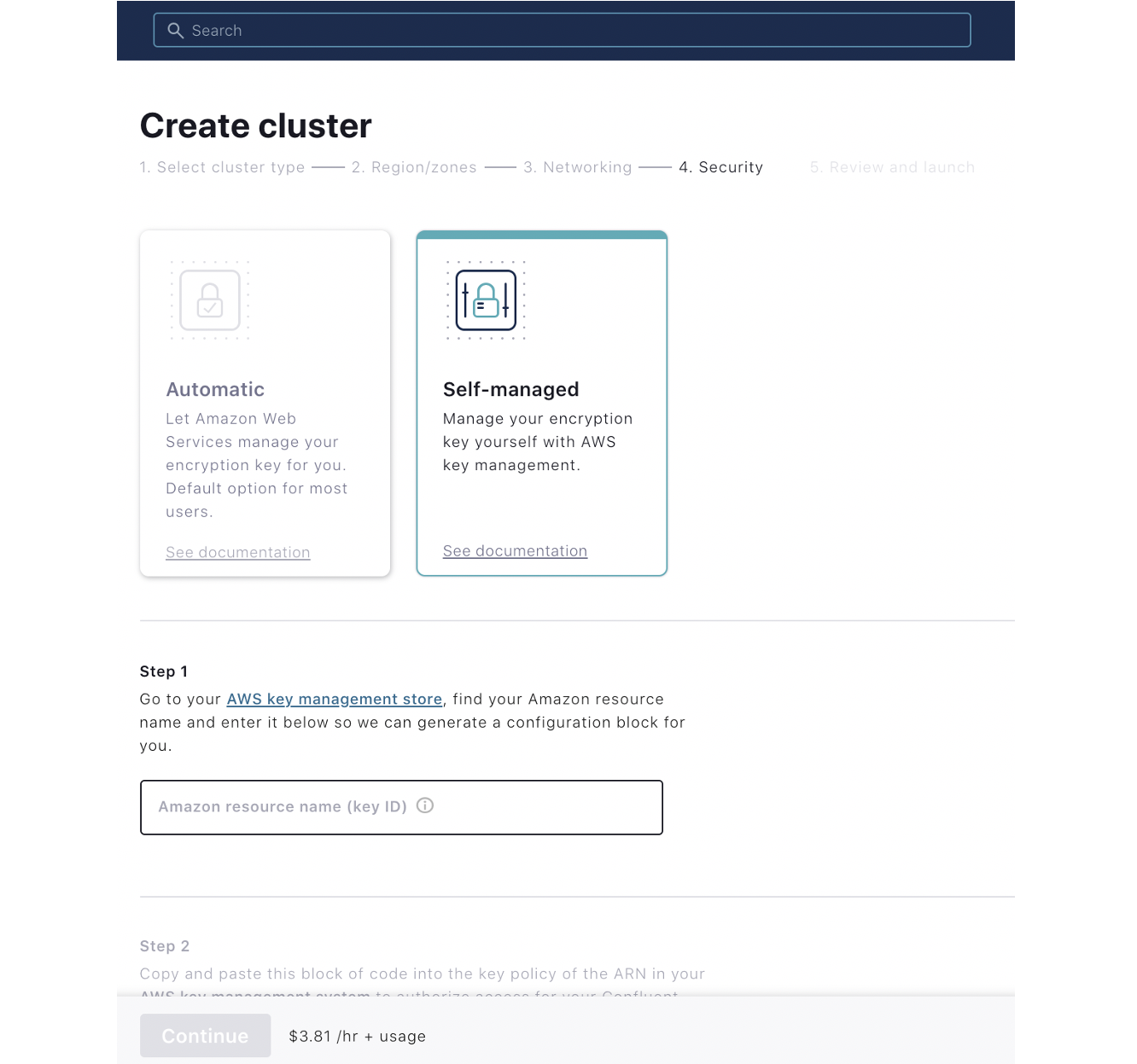

BYOK can be enabled easily when you provision a Dedicated cluster. Here is the BYOK workflow:

- Choose between using the default encryption mechanism (Automatic) or utilizing BYOK (Self-managed):

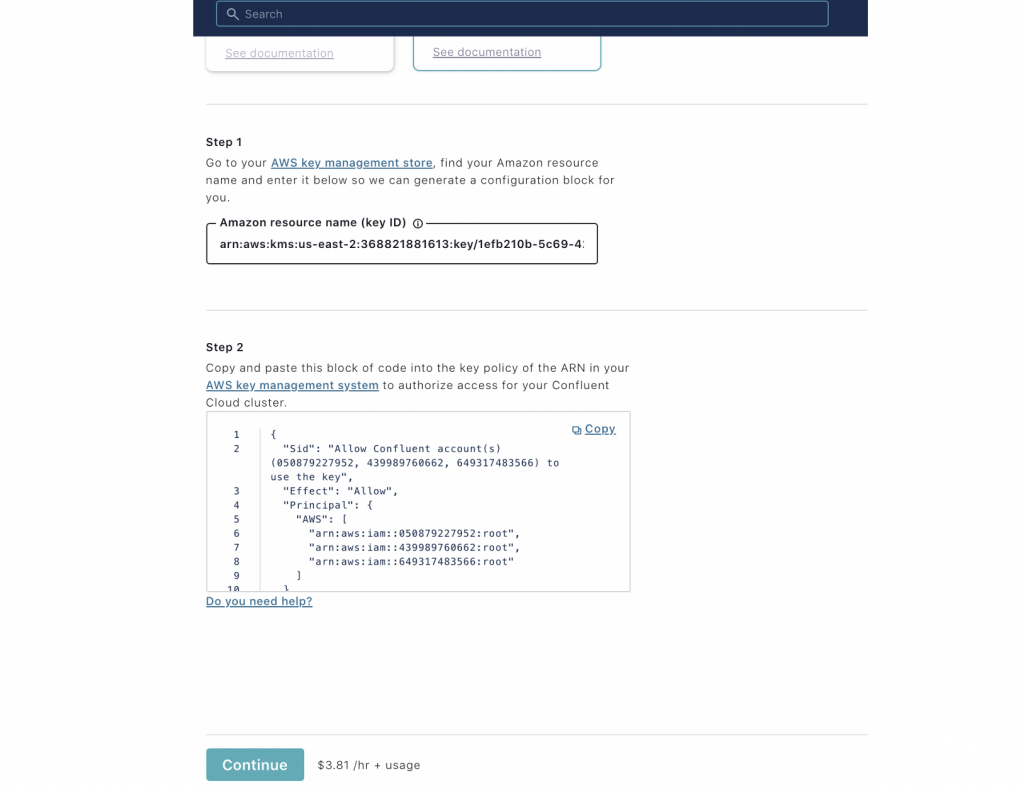

- If you choose to use BYOK, provide the Amazon Resource Name (ARN) of the AWS Key Management Service (KMS) master key that will be used for encryption.

- Grant Confluent permission to utilize the key for encryption/decryption-related operations:

Note that BYOK is only available on Confluent Cloud Dedicated clusters on AWS and works only with AWS KMS. Also, after a cluster is created, its encryption setting cannot be changed.
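Before entering a key ARN during provisioning, it can help to sanity-check it and prepare the key policy statement that grants access. The following is a minimal sketch, assuming the standard AWS KMS key ARN format; the function names, the statement ID, the principal ARN, and the action list are assumptions for illustration, not the exact policy Confluent requires. If Confluent Cloud shows a policy during cluster creation, use that instead.

```python
import re
from typing import Dict, List

# Format of a KMS key ARN, e.g. arn:aws:kms:us-east-2:111122223333:key/<key-id>.
# This sketch deliberately accepts only key ARNs, not alias ARNs.
_KMS_KEY_ARN = re.compile(
    r"arn:(?P<partition>aws[a-z-]*):kms:(?P<region>[a-z0-9-]+):"
    r"(?P<account>\d{12}):key/(?P<key_id>[A-Za-z0-9-]+)"
)


def parse_kms_key_arn(arn: str) -> Dict[str, str]:
    """Split a KMS key ARN into its parts, raising ValueError if it is malformed."""
    match = _KMS_KEY_ARN.fullmatch(arn.strip())
    if match is None:
        raise ValueError(f"not a KMS key ARN: {arn!r}")
    return match.groupdict()


def key_policy_statement(principal_arns: List[str]) -> Dict[str, object]:
    """Build a key policy statement letting the given principals use the key.

    Assumption: the principals and the action list are illustrative only.
    """
    if not principal_arns:
        raise ValueError("at least one principal ARN is required")
    return {
        "Sid": "AllowConfluentUseOfTheKey",  # hypothetical statement ID
        "Effect": "Allow",
        "Principal": {"AWS": list(principal_arns)},
        "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:ReEncrypt*",
            "kms:GenerateDataKey*",
            "kms:DescribeKey",
        ],
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }
```

Rejecting alias ARNs is a design choice in this sketch: an alias can later be repointed to a different key, whereas the cluster's encryption setting cannot be changed after creation.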

We are actively working on BYOK on Google Cloud. Adding support for BYOK on Azure is also on our roadmap.

Preview of Audit Logs

Our engineering team has been working hard to provide security Audit Logs so that you can track access to critical resources by users and applications. Audit Logs enable you to identify anomalies or bad actors to protect against unwanted access.

For this preview, we focus on auditing interactions with your Kafka clusters: we capture authentication events, as well as authorization events for cluster management actions (for example, creating or deleting topics). All of these events are written to a Kafka topic. Because the Audit Log topic behaves like any other Kafka topic, you can use third-party tools to view and manage the logs by consuming from it and sending the events to your platform of choice.

In the future, we plan to add audit events related to platform (or organization)-level operations like creating/deleting environments, clusters, etc.

Audit Logs are enabled by default, so you don’t have to take any action. You can consume the events from the Audit Log topic as you would from any other Kafka topic. Note that Kafka cluster Audit Logs will be available only for Dedicated and Standard clusters.
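A forwarder that ships these events to another platform can be small. The following is a minimal sketch, assuming the confluent-kafka Python client, a topic named `confluent-audit-log-events` (inferred from the `route` value in the example event below, minus its cluster prefix; check the actual topic name for your organization), and a caller-supplied `sink` function that delivers records to your log platform. The helper names and the choice of fields are hypothetical.

```python
import json
from typing import Callable, Dict, Optional

# Assumption: topic name inferred from the example's "route" field.
AUDIT_LOG_TOPIC = "confluent-audit-log-events"


def to_log_record(raw: bytes) -> Dict[str, Optional[object]]:
    """Flatten one audit log event (JSON bytes) into a few commonly indexed fields."""
    event = json.loads(raw)
    data = event.get("data", {})
    return {
        "time": event.get("time"),
        "type": event.get("type"),
        "method": data.get("methodName"),
        "resource": data.get("resourceName"),
        "principal": data.get("authenticationInfo", {}).get("principal"),
        # Present on authorization events; None for other event types.
        "granted": data.get("authorizationInfo", {}).get("granted"),
    }


def forward_audit_events(
    consumer_config: Dict[str, str],
    sink: Callable[[Dict[str, Optional[object]]], None],
    max_messages: int = 1000,
) -> int:
    """Consume up to max_messages audit events and pass each flattened record to sink."""
    # Imported here so to_log_record can be used without the client library installed.
    from confluent_kafka import Consumer

    consumer = Consumer(consumer_config)
    forwarded = 0
    try:
        consumer.subscribe([AUDIT_LOG_TOPIC])
        while forwarded < max_messages:
            msg = consumer.poll(1.0)
            if msg is None:
                continue  # no message within the timeout; keep polling
            if msg.error():
                raise RuntimeError(f"consumer error: {msg.error()}")
            sink(to_log_record(msg.value()))
            forwarded += 1
    finally:
        consumer.close()
    return forwarded
```

Here `consumer_config` would hold the SASL_SSL settings and credentials for the cluster that hosts the audit log topic, plus a `group.id`; for a first test, `sink` could simply be `print`.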

Here is an example of an Audit Log showing that a user has made a Kafka CreateTopics API request, resulting in an authorization check:

```json
{
  "data": {
    "serviceName": "crn:///kafka=lkc-682o6",
    "methodName": "kafka.CreateTopics",
    "resourceName": "crn:///kafka=lkc-682o6",
    "authenticationInfo": {
      "principal": "User:116649"
    },
    "authorizationInfo": {
      "granted": true,
      "operation": "Create",
      "resourceType": "Cluster",
      "resourceName": "kafka-cluster",
      "patternType": "LITERAL",
      "superUserAuthorization": true
    },
    "request": {
      "correlation_id": "92",
      "client_id": "adminclient-845"
    }
  },
  "id": "904ecc7a-bea6-49d9-9799-93871eea07f7",
  "source": "crn:///kafka=lkc-682o6",
  "specversion": "1.0",
  "type": "io.confluent.kafka.server/authorization",
  "datacontenttype": "application/json",
  "subject": "crn:///kafka=lkc-682o6",
  "time": "2020-09-30T21:00:33.226Z",
  "confluentRouting": {
    "route": "lkc-15v9v_confluent-audit-log-events"
  }
}
```
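Because each authorization event records the principal, the method, and whether access was `granted`, one simple way to surface possible bad actors is to count denied checks. This is a minimal sketch, assuming events have already been decoded into dicts shaped like the example above; the function names and the default threshold of 5 are arbitrary, illustrative choices rather than a Confluent feature.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

AUTHZ_EVENT_TYPE = "io.confluent.kafka.server/authorization"


def denied_checks(events: Iterable[Dict]) -> Counter:
    """Count denied authorization checks per (principal, method) pair."""
    counts: Counter = Counter()
    for event in events:
        if event.get("type") != AUTHZ_EVENT_TYPE:
            continue  # only authorization events carry a granted/denied decision
        data = event.get("data", {})
        # Treat a missing "granted" field as granted, so only explicit denials count.
        if data.get("authorizationInfo", {}).get("granted", True):
            continue
        principal = data.get("authenticationInfo", {}).get("principal", "<unknown>")
        counts[(principal, data.get("methodName", "<unknown>"))] += 1
    return counts


def flag_principals(events: Iterable[Dict], threshold: int = 5) -> List[Tuple[str, str]]:
    """Return (principal, method) pairs denied at least `threshold` times (hypothetical heuristic)."""
    return sorted(key for key, n in denied_checks(events).items() if n >= threshold)
```

A fixed threshold is the simplest possible heuristic; in practice you might compare counts over a time window or against each principal's usual behavior.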

Preview of Cluster RBAC

You can currently gate access to Kafka resources like topics through the use of service accounts and Access Control Lists (ACLs).

We plan to make the preview of Cluster RBAC available soon. This will enable you to:

- Assign the following roles to users:
  - The OrganizationAdmin role allows you to access and manage all the resources within the organization.
  - The EnvironmentAdmin role allows you to access and manage all the resources within the scoped environment.
  - The ClusterAdmin role allows you to access and manage all the resources within the scoped cluster.

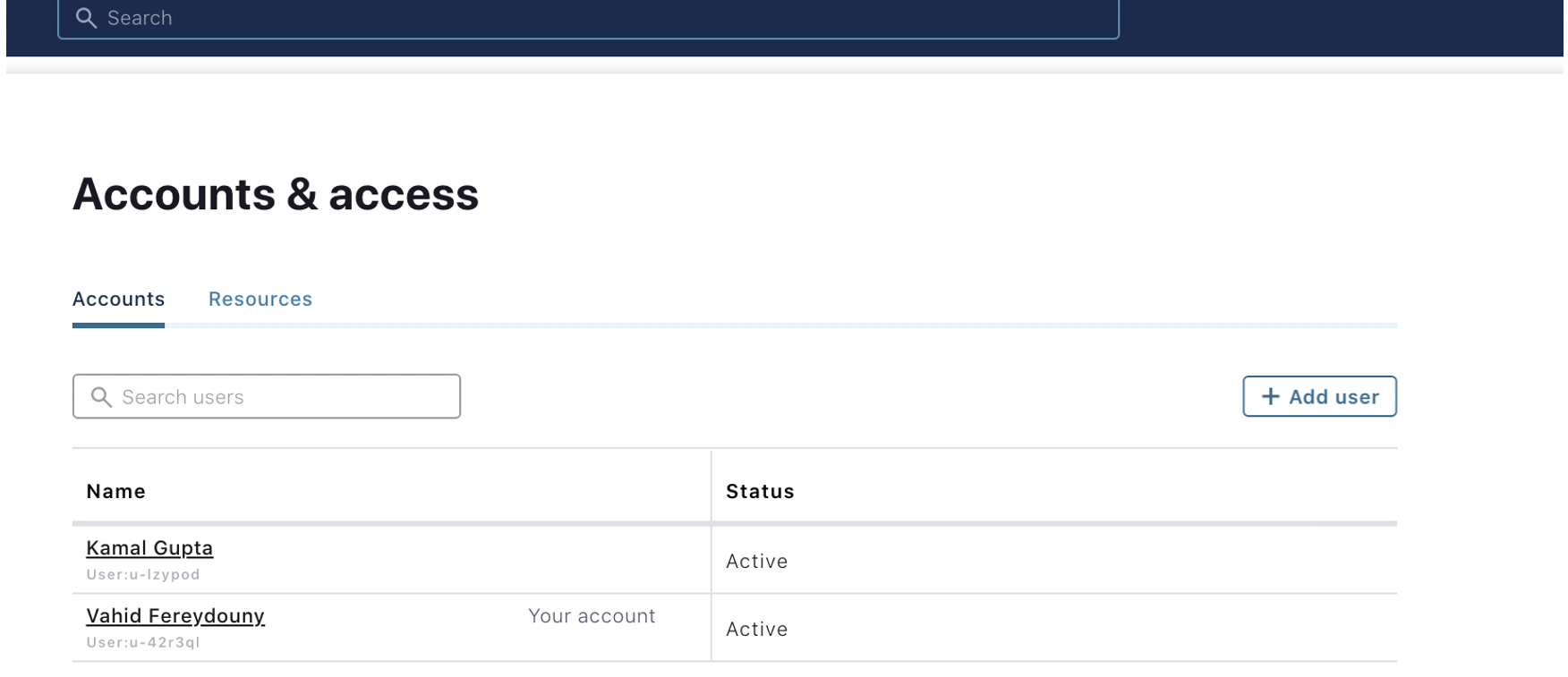

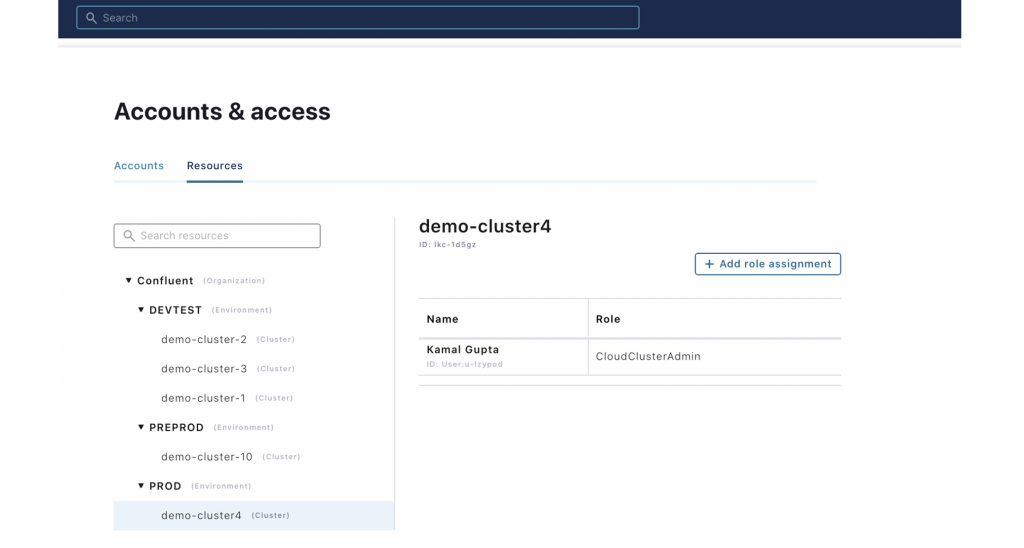

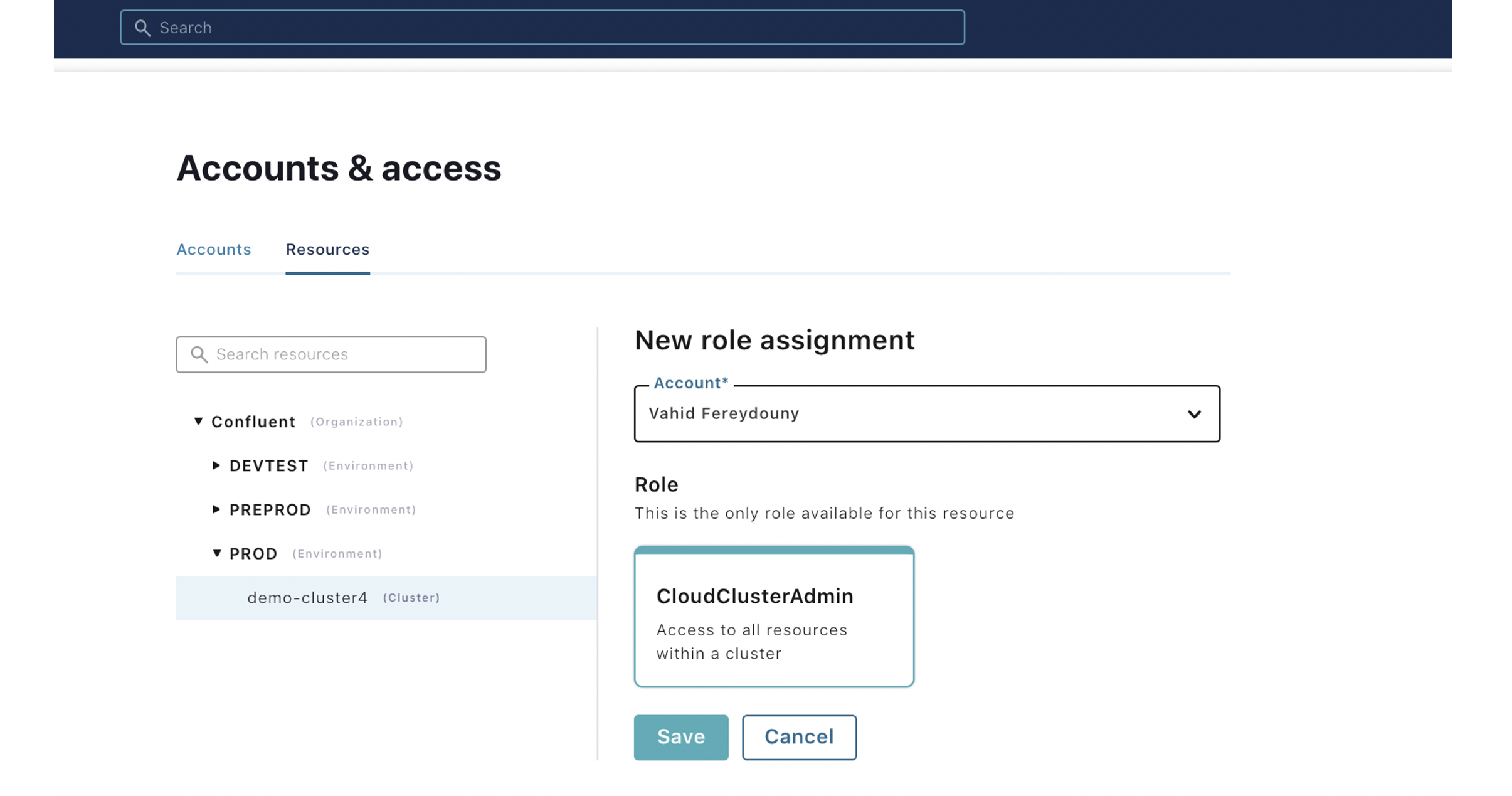

- View the list of users and their roles, as well as the list of resources and who has access to each of them.

- Enforce access based on each user’s roles, granting access only to the resources within the scope of those roles. For example, if a user is a ClusterAdmin for Cluster 1 in the TESTDEV environment, that user can access only the resources within Cluster 1; they can’t manage Cluster 2 in the TESTDEV environment or any cluster in the PRODUCTION environment.
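The scoping rule in the example above can be modeled as a simple check over a user’s role bindings. This is a minimal sketch of the semantics described here, not Confluent’s implementation; the `RoleBinding` type, the `can_manage` function, and the cluster identifiers are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class RoleBinding:
    """Hypothetical model of one role assignment."""
    role: str                          # "OrganizationAdmin", "EnvironmentAdmin" or "ClusterAdmin"
    environment: Optional[str] = None  # required for EnvironmentAdmin and ClusterAdmin
    cluster: Optional[str] = None      # required for ClusterAdmin


def can_manage(bindings: Iterable[RoleBinding], environment: str, cluster: str) -> bool:
    """Return True if any of the user's bindings covers the given cluster."""
    for b in bindings:
        if b.role == "OrganizationAdmin":
            return True  # organization scope covers every environment and cluster
        if b.role == "EnvironmentAdmin" and b.environment == environment:
            return True  # environment scope covers all clusters in that environment
        if b.role == "ClusterAdmin" and (b.environment, b.cluster) == (environment, cluster):
            return True  # cluster scope covers only that one cluster
    return False


# The example from the text: a ClusterAdmin for Cluster 1 in TESTDEV.
user = [RoleBinding("ClusterAdmin", environment="TESTDEV", cluster="cluster-1")]
```

Because `bindings` is a collection, the same check also works for a user who holds several roles at once.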

Cluster RBAC lets you onboard users without giving them broad access to all resources. It also lets you scope permissions so that teams can iterate rapidly on applications as they develop them, without compromising the security and stability of production applications.

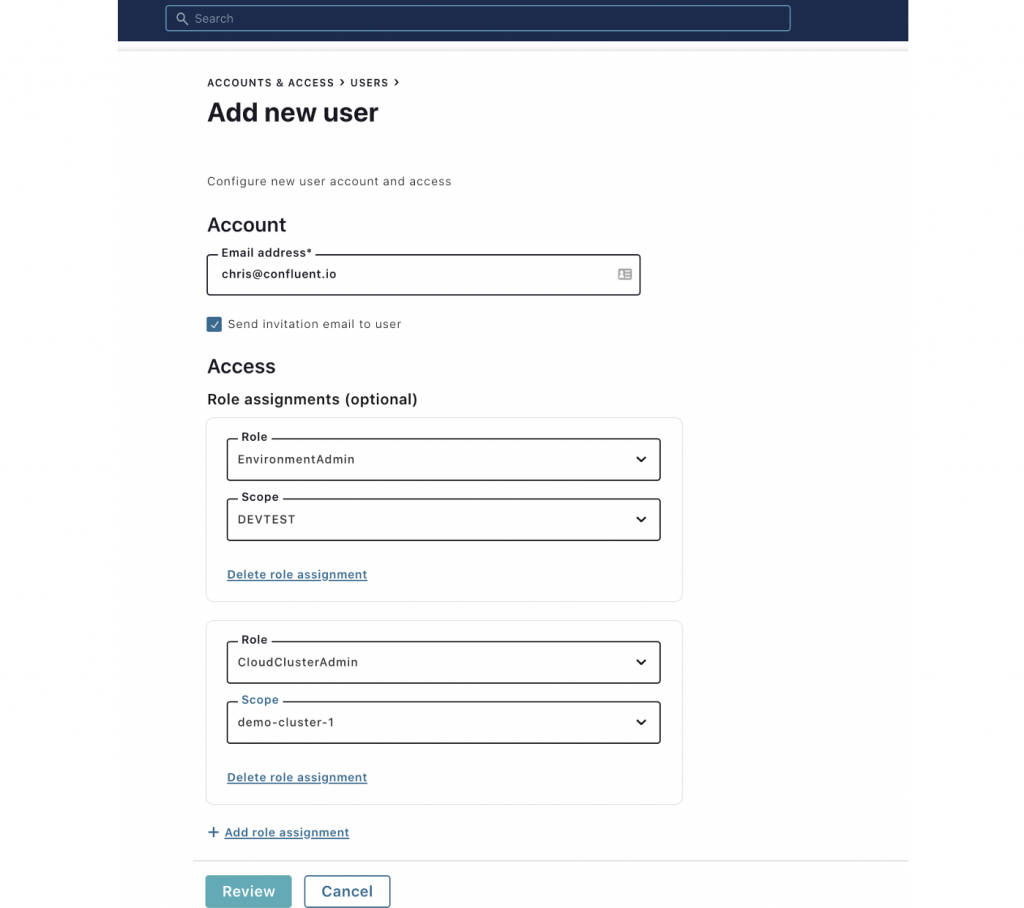

The following workflow shows how an admin can invite users and set their roles:

- View the list of the users:

- Invite a new user and grant them different roles. Notice that a user can have multiple roles:

- View the resource hierarchy and view/manage the role binding for a given resource:

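Viewing who has access to a resource amounts to walking up the resource hierarchy: a binding at the organization or environment level also covers everything beneath it. The following minimal sketch models that lookup with resource paths, assuming the three roles described above; the data layout, the function names, and the organization name `acme` are hypothetical, not how Confluent Cloud stores role bindings.

```python
from typing import List, Tuple

# Hypothetical layout: a resource is identified by its path in the hierarchy,
# (organization, environment, cluster); a binding is (user, role, scope path).
Path = Tuple[str, ...]
Binding = Tuple[str, str, Path]

# How deep in the hierarchy each role is scoped.
ROLE_DEPTH = {"OrganizationAdmin": 1, "EnvironmentAdmin": 2, "ClusterAdmin": 3}


def add_binding(bindings: List[Binding], user: str, role: str, scope: Path) -> None:
    """Record a role binding; a user may hold several bindings."""
    if ROLE_DEPTH.get(role) != len(scope):
        raise ValueError(f"unknown role or wrong scope depth for {role}: {scope}")
    bindings.append((user, role, scope))


def who_has_access(bindings: List[Binding], resource: Path) -> List[str]:
    """List users whose binding scope contains the resource (scope is a prefix of its path)."""
    return sorted({user for user, _role, scope in bindings if resource[: len(scope)] == scope})
```

Treating scopes as path prefixes keeps inheritance implicit: granting a role at a higher level never requires copying bindings down to each cluster.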
What’s next

We are actively working to make Role-Based Access Control and Audit Logs generally available. We also plan to extend BYOK for Dedicated clusters to Google Cloud and Azure.

Because Kafka security can be complicated, we are focused on providing easy-to-manage yet comprehensive security so that you can focus on your applications and business outcomes.

To get started with these features and the most secure, fully managed event streaming platform powered by Kafka, sign up for Confluent Cloud today. In addition, you can use the promo code CL60BLOG for an additional $60 of free Confluent Cloud usage and register for this online talk to see a live demo.
